Get Started with Large Language Models on Google Cloud

Explore the realm of Large Language Models on Google Cloud, focusing on the setup of your Google Cloud account and the use of Vertex AI Studio.

This guide simplifies the process, presenting an entry point to Large Language Models (LLMs) for those unfamiliar with machine learning or AI. It highlights the transformative impact of LLMs in AI interactions, showcasing their ability to produce text that closely resembles human writing.

By leveraging Google Cloud’s Vertex AI and Python, developers can seamlessly incorporate sophisticated AI functionalities into their projects.

Setting Up Your Google Cloud Account

Setting up your Google Cloud Account involves a few straightforward steps, designed to get you quickly up and running with the advanced capabilities of Large Language Models (LLMs) through Vertex AI. Here’s how you can start:

Creating a Google Cloud Account

- Free Credits: Google Cloud may offer free credits to new or certain users, which can be a substantial benefit as you explore and use various services. Check if you’re eligible for any promotional offers that apply to new accounts or specific products within Google Cloud.

- Starting Point: If you’re not already using Google services, the first step is to create a free Gmail account. This Gmail account serves as your gateway to Google Cloud and all its resources. Once your Gmail account is set up, proceed to the Google Cloud website to initiate the creation of your Google Cloud account. Follow the on-screen instructions to complete the setup, which typically involves verifying your identity and agreeing to the terms of service.

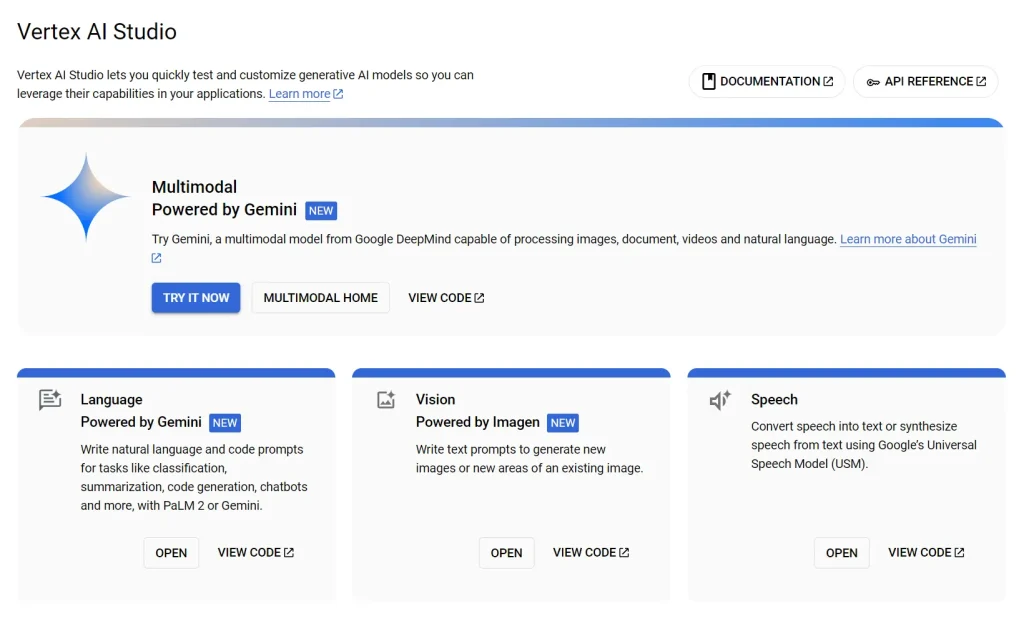

Navigating to Vertex AI Generative AI Studio

- Vertex AI Generative AI Studio is a specialized section within Google Cloud, dedicated to working with generative AI models, including LLMs. This studio is designed to be user-friendly, catering to both those who are new to machine learning and seasoned experts.

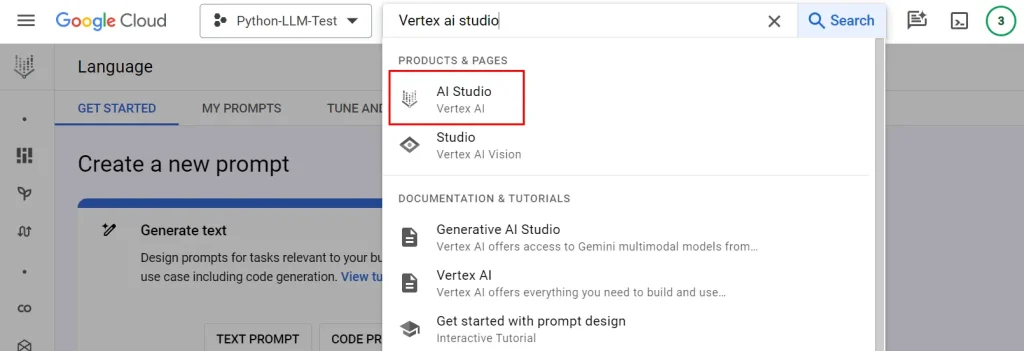

- To access the Vertex AI Generative AI Studio, log into your Google Cloud Console. Look for the “AI and Machine Learning” section or use the search bar to find “Vertex AI.” Once in the Vertex AI section, locate the Generative AI Studio option. This studio offers various tools and pre-built models that you can experiment with, providing a hands-on approach to learning and utilizing AI technologies.

- The platform guides you through the process of creating and deploying AI models, including selecting the type of model you want to work with (e.g., text, code, or embedding models) and setting up your first experiments. It’s a practical environment where you can directly apply LLMs to your projects, with extensive documentation and support available to assist you along the way.

Setting Up Your Environment

Prefer to code in your own environment, such as VS Code or Google Colab? No problem: follow these steps and code wherever you like.

Step 1: Create Service Credentials

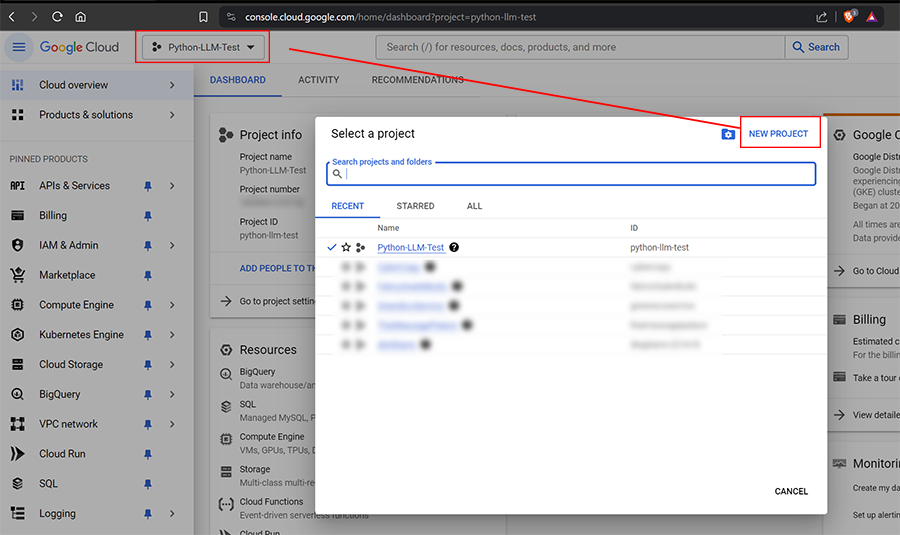

- Navigate to the Google Cloud Console: Log in to your Google Cloud account and access the Google Cloud Console through your web browser. Create a new project by clicking “New Project”.

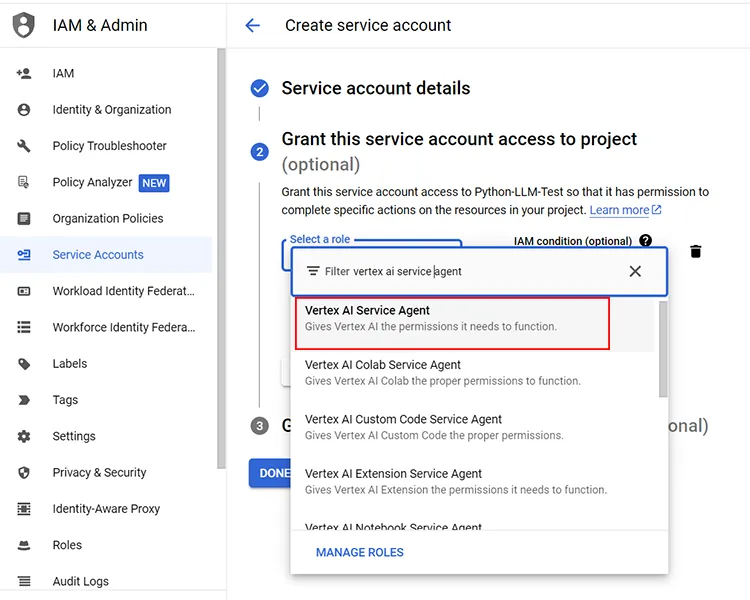

- Access the IAM & Admin Section: In the navigation menu on the Google Cloud Console, find and click on the “IAM & Admin” option, then select “Service Accounts”.

- Create a New Service Account: Click on “Create Service Account”. Fill in the required information, such as the service account name and description. Click “Create”.

- Grant Service Account Access: Assign roles to your service account that are appropriate for your use case. In this example with Vertex AI, we will select the role of “Vertex AI Service Agent”.

Click “Continue” after selecting the necessary role.

- Generate a Service Account Key:

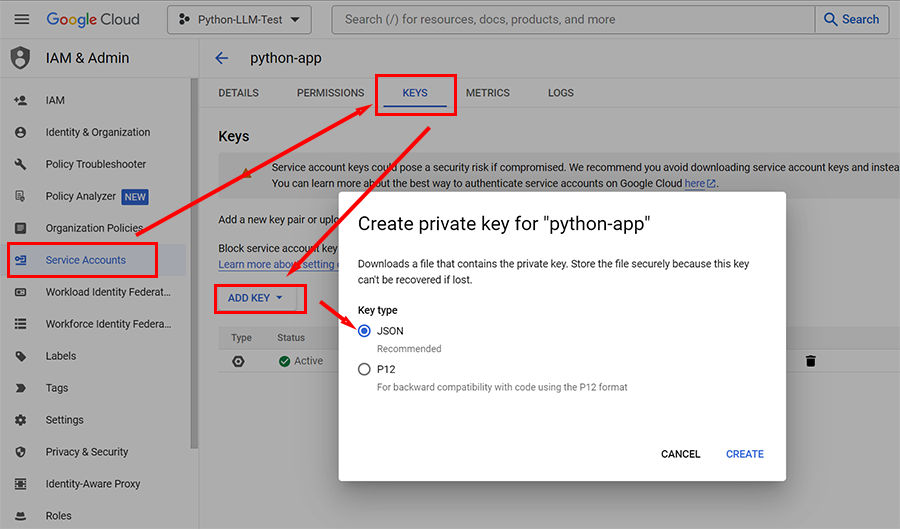

- Once the service account is created, click on it to view details.

- Go to the “Keys” tab and select “Add Key” > “Create new key”.

- Choose “JSON” as the key type and click “Create”. This action downloads a JSON file containing your service account credentials.

Step 2: Secure and Set Up Your Credentials

- Secure the JSON File: Store the downloaded JSON file securely. This file contains sensitive information that allows access to your Google Cloud resources.
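One way to act on this is to check the downloaded file and tighten its permissions before using it. The sketch below assumes the usual service account key layout (a JSON object whose `type` field is `service_account`, with `client_email` and `private_key` entries). The helper name `check_key_file` is hypothetical, and the permission change only has a real effect on POSIX systems.

```python
import json
import os
import stat
from pathlib import Path


def check_key_file(path: str) -> str:
    """Validate a service account key file and restrict it to its owner.

    Returns the service account email stored in the file.
    """
    key_path = Path(path)
    with key_path.open(encoding="utf-8") as handle:
        data = json.load(handle)

    # Assumption: downloaded keys carry these fields.
    if data.get("type") != "service_account":
        raise ValueError(f"{key_path} does not look like a service account key")
    for field in ("client_email", "private_key"):
        if field not in data:
            raise ValueError(f"{key_path} is missing the '{field}' field")

    # Owner read/write only (0o600); has little effect on Windows.
    os.chmod(key_path, stat.S_IRUSR | stat.S_IWUSR)
    return data["client_email"]
```

It is also worth keeping the key file out of version control, for example by listing it in `.gitignore`.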

Step 3: Install the Google Cloud aiplatform SDK

- Open Your Terminal: Ensure you have Python installed on your system, then open your terminal or command prompt. I am using Conda to set up a new environment for this project, named “llmgc” (for Large Language Models on Google Cloud), with Python 3.11.

Here is the command for Conda:

conda create -n llmgc python=3.11

Activate the new environment before installing anything into it:

conda activate llmgc

- Install the SDK: Enter the command

pip install google-cloud-aiplatform

This command downloads and installs the Vertex AI Python SDK, which is necessary for interacting with Vertex AI programmatically.
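To confirm that the package landed in the environment you expect, a quick check from Python can help. This is a minimal sketch using only the standard library; the helper name `installed_version` is made up for illustration.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str = "google-cloud-aiplatform") -> Optional[str]:
    """Return the installed version of a pip package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("google-cloud-aiplatform is not installed in this environment")
    else:
        print(f"google-cloud-aiplatform {version} is installed")
```

If the script reports that the package is missing even though the install succeeded, the interpreter running it is probably not the one from the Conda environment.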

Step 4: Connect to the Vertex AI API

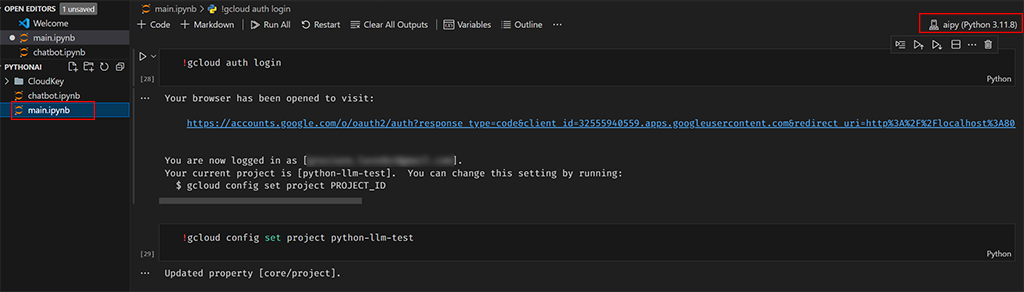

- Write Your Python Script: Open your favorite code editor or IDE and start a new Python script. I created a new Jupyter Notebook by adding a new file called main.ipynb.

Also check in VS Code on the top right if your VS Code is accessing the correct Python environment that you set up before with Conda.

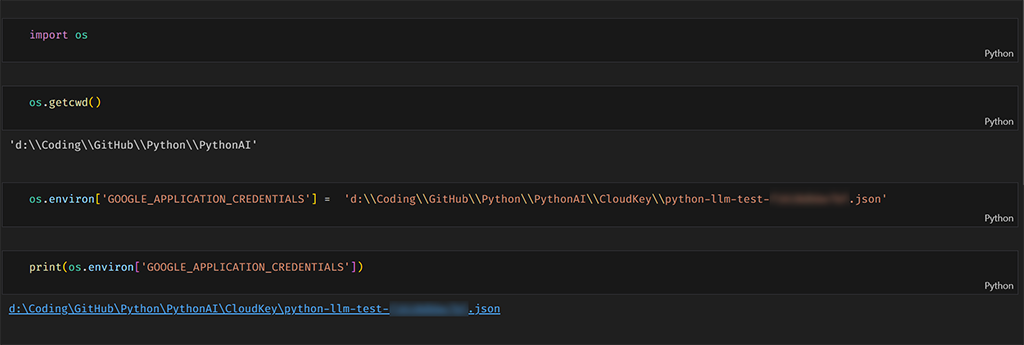

- First, we import the os module with the statement

import os

so that we can show the current working directory and set the environment variable.

- Then we output the current working directory with

os.getcwd()

so that we know whether to use forward slashes (/) or escaped backslashes (\\) in the path to our JSON file.

- With

os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = '[Path to your json file].json'

we set the environment variable needed to communicate with Google Cloud.

- To make sure that the environment variable is set correctly, print it and check that the path matches the location of the file:

print(os.environ['GOOGLE_APPLICATION_CREDENTIALS'])
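The same steps can be wrapped in a small helper that sidesteps the slash question by letting `pathlib` build the path. This is a sketch under the assumption that the key file already exists on disk; `set_credentials` is a hypothetical name, not part of any Google library.

```python
import os
from pathlib import Path


def set_credentials(key_file: str) -> str:
    """Point GOOGLE_APPLICATION_CREDENTIALS at key_file and return the path."""
    # expanduser/resolve yield an absolute path that uses the separators
    # of the current operating system.
    key_path = Path(key_file).expanduser().resolve()
    if not key_path.is_file():
        raise FileNotFoundError(f"Key file not found: {key_path}")
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = str(key_path)
    return str(key_path)
```

Failing early on a missing file tends to be easier to debug than an authentication error raised later by the first API call.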

Understanding The Different Model Types

Exploring the diverse range of model types available on Google Cloud’s Vertex AI can significantly enhance your projects, whether you’re generating text, developing code, or conducting semantic searches. Below is an expanded overview of these model types, their varieties, naming conventions, and pricing strategies:

Model Types:

- Text Models: These models are designed to understand and produce natural language outputs. When provided with a prompt or question, text models generate responses that can range from answering questions to crafting stories, making them invaluable for applications requiring content creation, customer service, or any task that benefits from natural language understanding and generation.

- Code Models: Tailored for developers and programmers, code models convert natural language descriptions into code snippets in various programming languages. This capability is particularly useful for automating coding tasks, generating boilerplate code, and even providing coding assistance and debugging suggestions, thereby enhancing productivity and accuracy in software development.

- Embedding Models: Embedding models process natural language text to produce embedding vectors, which represent the text in a high-dimensional space. These vectors can be used for semantic search applications, enabling the ability to find similar documents, analyze text similarity, and perform clustering and classification tasks based on textual content. Embedding models open up possibilities for advanced search functionalities and content recommendations based on semantic understanding.
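To make the semantic search idea concrete, the sketch below ranks documents by cosine similarity to a query vector. It uses plain Python lists; in practice the vectors would come from an embedding model, and the function names here are illustrative only.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    query: Sequence[float], documents: Dict[str, Sequence[float]]
) -> List[Tuple[str, float]]:
    """Return (name, score) pairs sorted from most to least similar."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in documents.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

Calling `rank_by_similarity(query_vector, {"doc_a": vec_a, "doc_b": vec_b})` returns the closest document first, which is the core of a similarity search.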

Model Varieties:

- The landscape of models includes foundation models such as PaLM and PaLM 2, which serve as the basis for more specialized models tailored to specific tasks. Names such as Bison and Gecko denote model sizes within this family: Bison is the larger size, balancing performance and cost for text, chat, and code tasks, while Gecko is the smallest, offering efficiency and lower costs for lighter workloads such as code completion and embeddings. The newest model family, Gemini, is also available, at a higher price than the other models. This mirrors OpenAI, where the newest GPT model likewise carries the highest price per token.

Model Naming and Usage:

Google Cloud employs a systematic naming convention for its models: “<use case>-<model size>@<version number>”. This format succinctly conveys the model’s primary application (e.g., text or code), its scale, and its version, making it easier for users to select the appropriate model for their needs based on these criteria.
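As an illustration of the convention, the following sketch splits a name such as text-bison@001 into its three parts. The parser and the `ModelName` type are hypothetical helpers written for this guide, and names that do not follow the pattern are rejected.

```python
import re
from typing import NamedTuple


class ModelName(NamedTuple):
    use_case: str
    size: str
    version: str


# <use case>-<model size>@<version number>
_PATTERN = re.compile(
    r"^(?P<use_case>[a-z]+)-(?P<size>[a-z]+)@(?P<version>[0-9A-Za-z.\-]+)$"
)


def parse_model_name(name: str) -> ModelName:
    """Split a model name into use case, size, and version."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"'{name}' does not follow <use case>-<model size>@<version>")
    return ModelName(match["use_case"], match["size"], match["version"])
```

For example, `parse_model_name("text-bison@001")` yields the use case `text`, the size `bison`, and the version `001`.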

Model Pricing:

- Vertex AI models are priced based on usage, specifically the number of characters processed, both for inputs and outputs. This pricing model ensures users pay in proportion to their usage, making it scalable for projects of all sizes. For the latest pricing information, it’s advisable to consult the Vertex AI Pricing page, as rates and available models may evolve over time to reflect advancements in technology and market demands.
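A character-based price is easy to estimate ahead of time. The sketch below assumes rates quoted per 1,000 characters; the function takes the rates as arguments because real prices vary by model and change over time, so look them up on the pricing page rather than hard-coding them.

```python
def estimate_cost(
    input_chars: int,
    output_chars: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate the cost of one request from its character counts.

    Prices are expressed per 1,000 characters (an assumption of this sketch).
    """
    if min(input_chars, output_chars) < 0:
        raise ValueError("character counts must not be negative")
    if min(input_price_per_1k, output_price_per_1k) < 0:
        raise ValueError("prices must not be negative")
    input_cost = (input_chars / 1000) * input_price_per_1k
    output_cost = (output_chars / 1000) * output_price_per_1k
    return input_cost + output_cost
```

Multiplying the result by the expected number of requests per month gives a rough budget figure to compare across models.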

Understanding these aspects of Google Cloud’s Vertex AI models empowers users to make informed choices about the right tools for their AI-driven projects, balancing between innovation, efficiency, and cost.

Prompting Techniques

To offer a deeper understanding, let’s delve into the concepts of zero-shot, one-shot, and few-shot prompting techniques used in machine learning, particularly with Large Language Models (LLMs), and then explore how Vertex AI accommodates these techniques in its API.

Zero-Shot Prompting

In zero-shot prompting, the model is expected to generate a relevant and accurate response based solely on a descriptive prompt without any previous examples or contextual information. This method tests the model’s ability to apply its pre-trained knowledge to new, unseen tasks. It’s akin to asking someone to perform a task they’ve never done before, with only the instructions for that task provided at that moment. The model uses its understanding of language and the world, inferred during its training phase, to generate a response.

Example:

Prompt: “Explain the significance of the Turing Test in artificial intelligence.”

The model, without prior examples, must use its training to understand the Turing Test’s importance and craft a coherent explanation.

One-Shot Prompting

One-shot prompting involves providing the model with a single example to guide its output. This example acts as a blueprint or template, showing the model the desired output format or style. It’s similar to teaching someone a new game by showing them one complete play-through; they then use this example to understand how to play the game themselves.

Example:

Example: “100 degrees Celsius is 212 degrees Fahrenheit.”

Prompt: “Convert this temperature from Celsius to Fahrenheit: 0 degrees Celsius.”

Guided by the single example, the model infers the expected answer format and applies the conversion to the new input.
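In code, a one-shot prompt is simply the worked example placed ahead of the new input. The sketch below assembles such a prompt for the temperature case and includes a reference conversion for checking the model’s answer. The `Input:`/`Output:` labels are an arbitrary formatting choice, not something the API requires.

```python
def build_one_shot_prompt(example_input: str, example_output: str, new_input: str) -> str:
    """Place one solved example before the input the model should complete."""
    return (
        f"Input: {example_input}\n"
        f"Output: {example_output}\n\n"
        f"Input: {new_input}\n"
        "Output:"
    )


def celsius_to_fahrenheit(celsius: float) -> float:
    """Reference conversion, useful for verifying the model's reply."""
    return celsius * 9 / 5 + 32


prompt = build_one_shot_prompt(
    "100 degrees Celsius",
    f"{celsius_to_fahrenheit(100):g} degrees Fahrenheit",
    "0 degrees Celsius",
)
```

Ending the prompt with an open `Output:` line nudges the model to reply in the same shape as the example.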

Few-Shot Prompting

With few-shot prompting, the model is given several examples to help it understand the task at hand. This technique allows the model to synthesize information from multiple instances, improving its ability to produce content that aligns more closely with the provided examples. This is akin to learning a concept through multiple case studies, which together offer a broader understanding of the subject.

Example:

Prompt: “Write a product description.”

Examples: Multiple examples of product descriptions, each highlighting different styles or focuses.

The model uses these examples to generate a new product description that incorporates the various styles or elements shown in the examples.
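A few-shot prompt for this case can be assembled by joining the instruction, the examples, and the new item. This is a minimal sketch: the `Product:`/`Description:` labels and the blank-line delimiter are assumptions, and any sample products you pass in are your own data.

```python
from typing import Iterable, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Iterable[Tuple[str, str]],
    new_item: str,
    delimiter: str = "\n\n",
) -> str:
    """Join an instruction, several solved examples, and one open item."""
    blocks = [instruction.strip()]
    for item, description in examples:
        blocks.append(f"Product: {item}\nDescription: {description}")
    # The final block is left open for the model to complete.
    blocks.append(f"Product: {new_item}\nDescription:")
    return delimiter.join(blocks)
```

Keeping the examples consistent in length and tone usually matters more than adding many of them, since the model tends to imitate what it sees.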

Vertex AI API and Prompting Techniques

Vertex AI’s API extends support for these prompting techniques, enabling you to harness the versatility of LLMs more effectively.

- For One-Shot and Few-Shot Prompts: The API allows you to include examples directly within the prompt for the TextGenerationModel. This means you can concatenate your examples with the actual prompt, separated by a special delimiter or simply by new lines, to guide the model’s response.

- Using the examples Parameter with InputOutputTextPair Objects for ChatModel: This approach is particularly useful for interactive models like chatbots. The examples parameter enables you to define a “history” of interaction—pairs of inputs and outputs that the model uses to understand the context of the conversation. Each InputOutputTextPair object represents a single exchange in this history, helping the model to generate responses that are consistent with the given examples.
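The two approaches might look roughly like this with the Vertex AI Python SDK. Treat it as a sketch under several assumptions: credentials are already configured, `vertexai.init(project=..., location=...)` has been called with your own values, and the model names `text-bison@001` and `chat-bison@001` are still available to your project. The wrapper function names are made up for illustration.

```python
from typing import Iterable, Optional, Tuple


def ask_text_model(prompt: str, model_name: str = "text-bison@001") -> str:
    """Send a ready-made prompt (zero-, one-, or few-shot) to a text model."""
    # Imported lazily so this module loads even if the SDK is not installed.
    from vertexai.language_models import TextGenerationModel

    model = TextGenerationModel.from_pretrained(model_name)
    response = model.predict(prompt, temperature=0.2, max_output_tokens=256)
    return response.text


def ask_chat_model(
    message: str,
    examples: Iterable[Tuple[str, str]],
    context: Optional[str] = None,
    model_name: str = "chat-bison@001",
) -> str:
    """Start a chat seeded with example exchanges and send one message."""
    from vertexai.language_models import ChatModel, InputOutputTextPair

    # Each (input, output) tuple becomes one example exchange.
    pairs = [
        InputOutputTextPair(input_text=question, output_text=answer)
        for question, answer in examples
    ]
    chat = ChatModel.from_pretrained(model_name).start_chat(
        context=context, examples=pairs
    )
    return chat.send_message(message).text
```

For the text model the examples live inside the prompt string itself, whereas the chat model receives them as structured pairs, which keeps the actual user message free of formatting scaffolding.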

Real-World Use Cases

Here are a few examples of how LLMs can be used in real-world applications:

- Customer Support: Automating responses to common customer inquiries.

- Content Creation: Generating articles, blog posts, and other forms of written content.

- Code Assistance: Providing coding suggestions and automating repetitive coding tasks.

Conclusion

Embarking on your journey with Large Language Models (LLMs) on Google Cloud opens up a world of possibilities for leveraging advanced AI technologies. This guide has provided you with a comprehensive walkthrough, from setting up your Google Cloud account to navigating Vertex AI Generative AI Studio and understanding the various model types and prompting techniques. By following these steps, you can seamlessly integrate sophisticated AI functionalities into your projects, enhancing your capabilities in natural language processing, code generation, and more.

Remember, the key to mastering LLMs lies in continuous experimentation and learning. Utilize the extensive documentation and support available on Google Cloud, explore real-world use cases, and don’t hesitate to dive deeper into specific areas of interest. As AI continues to evolve, staying updated with the latest advancements will ensure that you remain at the forefront of innovation.

We hope this guide has simplified the initial steps and inspired you to explore the transformative potential of LLMs on Google Cloud. Whether you are a beginner or an experienced developer, the tools and techniques discussed here will empower you to create impactful AI-driven solutions. Happy coding and exploring the limitless possibilities of Large Language Models!

Graz is a tech enthusiast with over 15 years of experience in the software industry, specializing in AI and software. With roles ranging from Coder to Product Manager, Graz has honed his skills in making complex concepts easy to understand. Graz shares his insights on AI trends and software reviews through his blog and social media.